Cache Mapping Techniques

Cache mapping techniques are the methods used to load data from main memory into cache memory.

Problems based on memory mapping techniques in computer architecture are frequently asked in the GATE (CS/IT) and UGC NET examinations.

GATE 2022 aspirants are requested to read this tutorial till the end. After reading this tutorial, you will be able to solve the problems based on mapping in cache memory.

In this tutorial on cache memory mapping techniques for the GATE exam, we will learn about the different types of cache memory mapping techniques. These techniques are used to bring information from main memory into cache memory. Examples of each cache mapping technique are also explained in this tutorial.

Frequently Asked Questions

After reading this tutorial, students will be able to answer the following frequently asked questions:

- What is cache memory?

- What is a cache memory mapping technique?

- What is direct mapping?

- What is the associative mapping technique?

- What do you understand by set-associative memory mapping?

- What are the differences between the different cache memory mapping techniques?

- What do the tag, block, and word fields represent in a logical address?

- What is the set field of a logical address?

- How can we improve the performance of the cache?

There are three types of mapping techniques used in cache memory. Let us see them one by one.

What is Cache Memory Mapping?

Cache memory mapping is a method of loading data from the main memory into the cache memory. In a more technical sense, the main memory content that the CPU references is brought into the cache memory.

There are three mapping techniques of cache memory, which are explained in this post.

- Direct Mapped Cache.

- Associative Mapping Technique.

- Set Associative Mapping Technique.

Let’s understand each cache mapping technique one by one.

(1) Associative Mapping Technique

The fastest and most flexible cache organization uses an associative memory. The associative memory stores both the address and the content (data) of the memory word.

In the associative mapping technique, any memory word from the main memory can be stored at any location in the cache memory.

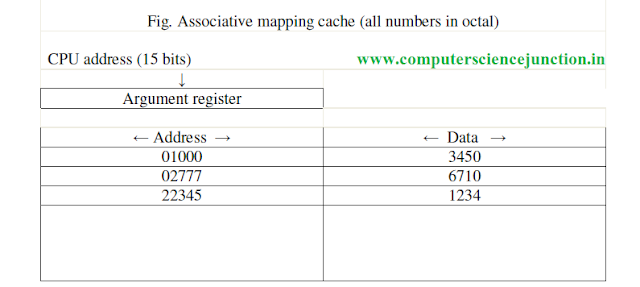

The 15-bit address value is shown as a five-digit octal number, and its corresponding 12-bit word is shown as a four-digit octal number.

The 15-bit address generated by the CPU is placed in the argument register, and the associative memory is searched for a matching address.

If the address is present in the cache, the corresponding 12-bit data is read from it and sent to the CPU.

If no match occurs for that address, the required word is accessed from the main memory, and the address-data pair is then transferred to the associative cache memory.

If the cache is full, the question arises of where to store this address-data pair. This is where replacement algorithms come in.

A replacement algorithm determines which existing address-data pair is removed from the cache to make room for the required data.

A simple procedure is to replace the cells of the cache in round-robin order whenever a new word is requested from main memory. This constitutes a first-in first-out (FIFO) replacement policy.
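The associative lookup and FIFO replacement described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a model of real hardware: the class name `AssociativeCache`, the tiny capacity, and the memory contents are hypothetical, and a dictionary lookup stands in for the parallel associative search.

```python
from collections import OrderedDict


class AssociativeCache:
    """Fully associative cache of address-data pairs with FIFO replacement."""

    def __init__(self, capacity, main_memory):
        self.capacity = capacity          # number of address-data pairs the cache holds
        self.main_memory = main_memory    # dict: address -> data word
        self.cells = OrderedDict()        # insertion order records the load order

    def read(self, address):
        """Return (data, hit) for the requested address."""
        if address in self.cells:         # stands in for the associative search
            return self.cells[address], True
        data = self.main_memory[address]  # miss: fetch the word from main memory
        if len(self.cells) >= self.capacity:
            self.cells.popitem(last=False)  # evict the pair that was loaded first
        self.cells[address] = data
        return data, False


# Illustrative contents: 15-bit addresses and 12-bit words written in octal.
memory = {0o01000: 0o3450, 0o02777: 0o6710, 0o22345: 0o1234}
cache = AssociativeCache(capacity=2, main_memory=memory)
print(cache.read(0o01000))  # first access is a miss
print(cache.read(0o01000))  # second access is a hit
```

Note that under FIFO a hit does not change the eviction order; only the order in which pairs were loaded matters.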

(2) Direct Cache Mapping Technique

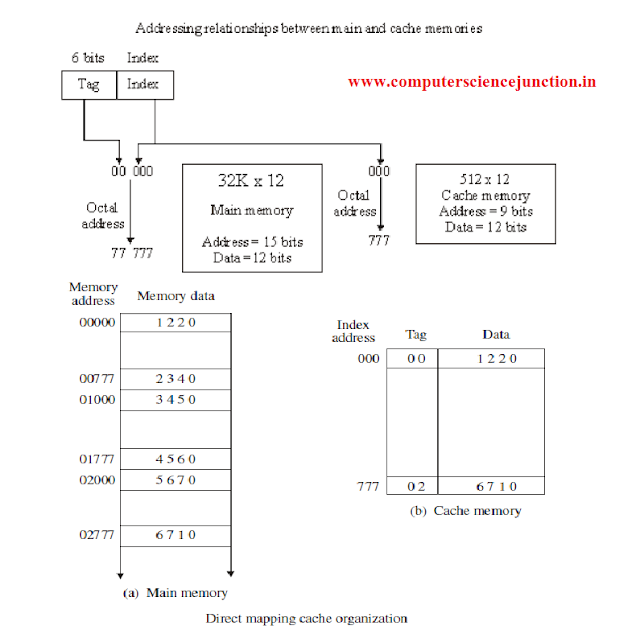

Associative memories are more costly than random-access memories because of the extra logic associated with each cell. Direct mapping therefore uses an ordinary random-access memory for the cache. The 15-bit address generated by the CPU is divided into two fields.

The nine least significant bits form the index field and the remaining six bits form the tag field. The figure shows that main memory requires an address that includes both the tag and the index bits.

The number of bits in the index field is equal to the number of address bits required to access the cache memory.

In the general case, there are 2^k words in cache memory and 2^n words in main memory.

The n-bit memory address is divided into two fields: k bits for the index field and n-k bits for the tag field.
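The split of an address into tag and index fields can be sketched with two bit operations. The function name `split_address` and the sample address 02777 are illustrative assumptions; the field widths (n = 15, k = 9) are the ones used in this tutorial.

```python
ADDRESS_BITS = 15  # n: width of the main memory address
INDEX_BITS = 9     # k: width of the index field


def split_address(address, index_bits=INDEX_BITS):
    """Split an address into (tag, index) for a direct-mapped cache."""
    index = address & ((1 << index_bits) - 1)  # the k least significant bits
    tag = address >> index_bits                # the remaining n-k bits
    return tag, index


tag, index = split_address(0o02777)
print(f"tag={tag:02o} index={index:03o}")  # prints "tag=02 index=777"
```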

- The direct mapping cache organization uses the n-bit address to access the main memory and the k-bit index to access the cache. The internal organization of the words in the cache memory is shown in the figure.

- Each word in the cache consists of the data word and its associated tag. When a new word is loaded into the cache, its tag bits are stored alongside the data bits. When the CPU generates a memory request, the index field of the address is used to access the cache.

- The tag field of the CPU address is compared with the tag in the word read from the cache. If the two tags match, there is a cache hit and the desired data word is available in the cache.

- If the two tags do not match, there is a miss: the required word is not present in the cache, so it is read from main memory. It is then stored in the cache memory along with the new tag.

- The disadvantage of the direct mapping technique is that the hit ratio can drop considerably if two or more words whose addresses have the same index but different tags are accessed repeatedly.

To see how the direct-mapping technique operates, consider the numerical example shown in the figure. The word at address zero is presently stored in the cache with index = 000, tag = 00, and data = 1220.

Assume that the CPU now wants to read the word at address 02000. The index address is 000, so it is used to access the cache, and the two tags are then compared.

The cache tag is 00 but the address tag is 02. The two tags do not match, so a miss occurs. The main memory is therefore accessed and the data word 5670 is sent to the CPU. The cache word at index address 000 is then replaced with a tag of 02 and data of 5670.
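The example above can be replayed with a small direct-mapped cache model. This is a sketch under stated assumptions: the class name `DirectMappedCache` is hypothetical, main memory is modelled as a dictionary, and only the two words from the example are present in it.

```python
INDEX_BITS = 9  # width of the index field used in this tutorial


class DirectMappedCache:
    """Direct-mapped cache: each index holds exactly one (tag, data) pair."""

    def __init__(self, main_memory, index_bits=INDEX_BITS):
        self.main_memory = main_memory  # dict: address -> data word
        self.index_bits = index_bits
        self.lines = {}                 # index -> (tag, data)

    def read(self, address):
        """Return (data, hit) for the requested address."""
        index = address & ((1 << self.index_bits) - 1)
        tag = address >> self.index_bits
        entry = self.lines.get(index)
        if entry is not None and entry[0] == tag:
            return entry[1], True             # tags match: cache hit
        data = self.main_memory[address]      # miss: read from main memory
        self.lines[index] = (tag, data)       # replace the word at this index
        return data, False


memory = {0o00000: 0o1220, 0o02000: 0o5670}
cache = DirectMappedCache(memory)
cache.read(0o00000)              # loads tag 00, data 1220 at index 000
data, hit = cache.read(0o02000)  # same index 000, different tag 02
print(f"{data:04o}", hit)        # prints "5670 False"
```

Reading address 00000 again after this would miss once more, which is exactly the hit-ratio problem that arises when words with the same index but different tags are accessed repeatedly.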

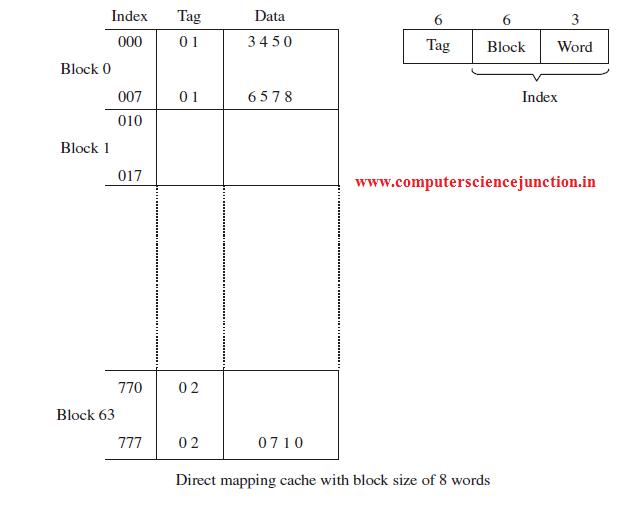

When each cache block holds 8 words, the index field is divided into two parts: the first part is the cache block field and the second is the word field. In a 512-word cache there are 64 cache blocks of 8 words each, since 64 × 8 = 512.

Since the cache has 64 blocks, 6 bits are needed to identify a block, so the block field is 6 bits wide. Since each block holds 8 words, 3 bits are needed to identify a word within a block, so the word field is 3 bits wide.
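The division of the 9-bit index into a block field and a word field can be sketched as below. The function name `split_index` and the sample index value are illustrative assumptions; the field widths follow the 64-block, 8-words-per-block cache described above.

```python
BLOCK_BITS = 6  # 64 blocks -> 6 bits
WORD_BITS = 3   # 8 words per block -> 3 bits


def split_index(index, word_bits=WORD_BITS):
    """Split a cache index into (block, word)."""
    block = index >> word_bits               # upper bits select the block
    word = index & ((1 << word_bits) - 1)    # lower bits select the word in the block
    return block, word


assert (1 << BLOCK_BITS) * (1 << WORD_BITS) == 512  # 64 blocks x 8 words

block, word = split_index(0o017)
print(block, word)  # prints "1 7": last word of block 1
```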

(3) Set Associative Mapping Technique

The disadvantage of the direct mapping technique is that two words with the same index in their address but with different tag values cannot reside in the cache at the same time.

A third type of cache organization, called set-associative mapping, is an improvement over the direct-mapping organization in that each word of cache can store two or more words of memory under the same index address.

In this technique, each data word is stored together with its tag, and the tag-data items stored in one word of cache are said to form a set.

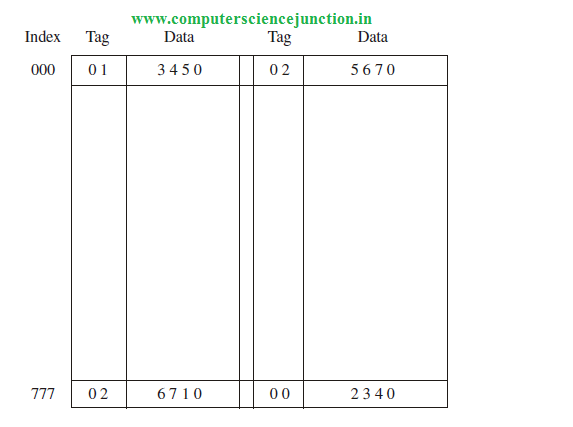

The concept of set-associative memory is explained here with the help of an example. An example of a set-associative cache with a set size of two is shown in the figure. Each index address refers to two data words and their associated tags.

Each tag requires 6 bits and each data word has 12 bits, so the word length is 2 × (6 + 12) = 36 bits.

A 9-bit index address can address 512 words, so the cache memory size is 512 × 36.

It can accommodate 1024 words of main memory, since each word of cache contains two data words. In general, a set-associative cache of set size K will accommodate K words of main memory in each word of cache.
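The sizing arithmetic above can be checked with a short calculation. The function name `set_associative_geometry` is an assumption introduced for illustration; the inputs are the figures from this example.

```python
def set_associative_geometry(tag_bits, data_bits, index_bits, set_size):
    """Return (word_length, cache_words, main_memory_words_held)."""
    word_length = set_size * (tag_bits + data_bits)  # bits in one word of cache
    cache_words = 2 ** index_bits                    # words addressed by the index
    capacity = cache_words * set_size                # main memory words the cache can hold
    return word_length, cache_words, capacity


print(set_associative_geometry(tag_bits=6, data_bits=12, index_bits=9, set_size=2))
# prints "(36, 512, 1024)"
```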

When the CPU generates a logical address to fetch a word from main memory then the index value of the address is used to access the cache.

The tag field of the CPU generated address is then compared with both tags in the cache to determine whether they match or not.

The comparison is performed by an associative search of the tags in the set, similar to an associative memory search, hence the name “set-associative.”

Cache performance improves when the hit ratio improves, and the hit ratio improves as the set size increases, because more words with the same index but different tags can reside in the cache.

When a miss occurs in a set-associative cache and the set is full, it is necessary to replace one of the tag-data items with a new value using a cache replacement algorithm.
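A two-way set-associative lookup with replacement can be sketched as follows. This is a minimal sketch, assuming FIFO replacement within each set (the text does not fix a particular policy); the class name `SetAssociativeCache` and the memory contents are hypothetical.

```python
class SetAssociativeCache:
    """Set-associative cache: each index holds up to set_size (tag, data) items."""

    def __init__(self, main_memory, index_bits=9, set_size=2):
        self.main_memory = main_memory  # dict: address -> data word
        self.index_bits = index_bits
        self.set_size = set_size
        self.sets = {}                  # index -> list of (tag, data), oldest first

    def read(self, address):
        """Return (data, hit) for the requested address."""
        index = address & ((1 << self.index_bits) - 1)
        tag = address >> self.index_bits
        items = self.sets.setdefault(index, [])
        for stored_tag, data in items:  # compare against every tag in the set
            if stored_tag == tag:
                return data, True
        data = self.main_memory[address]  # miss: read from main memory
        if len(items) >= self.set_size:
            items.pop(0)                  # set is full: evict the oldest item (FIFO)
        items.append((tag, data))
        return data, False


# Three illustrative words that all share index 000 but have tags 00, 02 and 01.
memory = {0o00000: 0o1220, 0o02000: 0o5670, 0o01000: 0o3450}
cache = SetAssociativeCache(memory)
cache.read(0o00000)         # miss, loads tag 00
cache.read(0o02000)         # miss, loads tag 02 into the same set
print(cache.read(0o00000))  # hit: both words now reside under index 000
```

With a set size of two, the two conflicting words coexist in the cache. A third word with the same index forces a replacement.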

Conclusion and Summary

I hope this computer science study material will be useful for GATE aspirants and will help them solve GATE exam questions based on cache mapping techniques.

In this tutorial we have explained each cache mapping technique with an example. I hope it will also be useful for computer science students.

Readers are kindly requested to share their feedback and suggestions. If you find any mistake in this tutorial, please leave a comment.

Don’t stop learning and practicing.

All the best!